YouTube has revolutionized how people discover and consume videos, becoming one of the primary news sources for Internet users. Since content on YouTube is generated by its users, the platform is particularly vulnerable to misinformative and conspiratorial videos. Moreover, the role played by YouTube’s recommendation algorithm in unwittingly promoting questionable content is not well understood, and it could exacerbate the problem. This can have dire real-world consequences, especially when pseudoscientific content is promoted to users at critical times, e.g., during the COVID-19 pandemic.

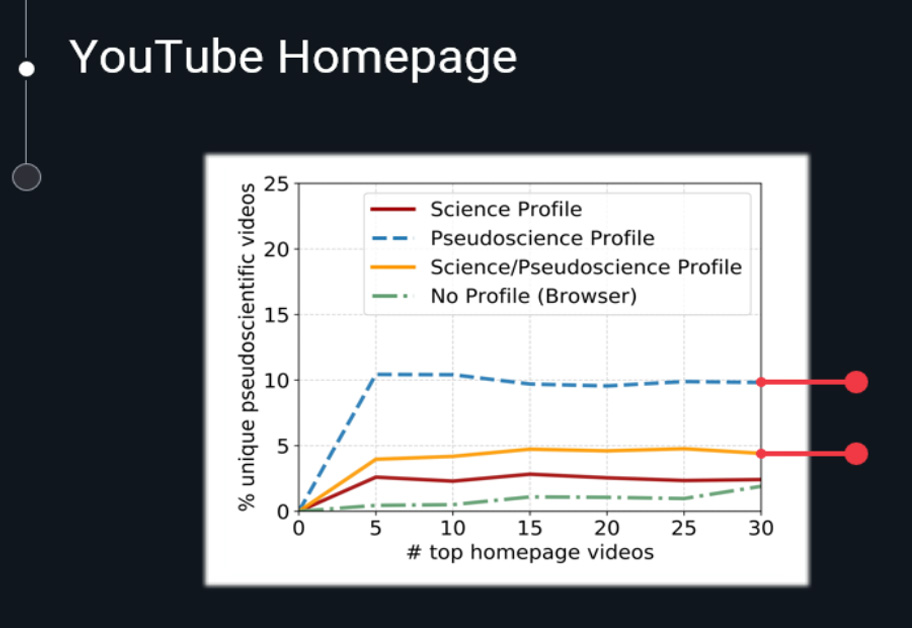

In our paper, “‘It is just a flu’: Assessing the Effect of Watch History on YouTube’s Pseudoscientific Video Recommendations” (https://arxiv.org/abs/2010.11638), we set out to characterize and detect pseudoscientific misinformation on YouTube. We collect 6.6K videos related to COVID-19, the flat earth theory, and the anti-vaccination and anti-mask movements; using crowdsourcing, we annotate them as pseudoscience, legitimate science, or irrelevant. We then train a deep learning classifier to detect pseudoscientific videos with an accuracy of 76.1%. Next, we quantify user exposure to this content on various parts of the platform (i.e., a user’s homepage, recommended videos while watching a specific video, or search results) and how this exposure changes based on the user’s watch history.
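To make the idea of quantifying exposure concrete, here is a minimal Python sketch. It is not the paper's measurement pipeline. It only illustrates one simple way such a metric could be computed: the share of videos labeled as pseudoscience among those encountered in each part of the platform. The function name `exposure_by_section`, the section names, and the label strings are assumptions made for this example, and the toy data is invented purely for illustration (it does not reflect any result from the study).

```python
from collections import defaultdict


def exposure_by_section(observations):
    """Return, per section, the fraction of videos labeled 'pseudoscience'.

    `observations` is an iterable of (section, label) pairs, where `section`
    is a hypothetical platform area (e.g. "homepage", "search") and `label`
    is one of "pseudoscience", "science", or "irrelevant".
    """
    totals = defaultdict(int)   # videos seen per section
    pseudo = defaultdict(int)   # pseudoscientific videos seen per section
    for section, label in observations:
        totals[section] += 1
        if label == "pseudoscience":
            pseudo[section] += 1
    return {section: pseudo[section] / totals[section] for section in totals}


# Invented toy observations, for illustration only (not data from the paper).
toy_observations = [
    ("homepage", "science"),
    ("homepage", "irrelevant"),
    ("search", "pseudoscience"),
    ("search", "science"),
    ("recommendations", "irrelevant"),
]

rates = exposure_by_section(toy_observations)
for section, rate in sorted(rates.items()):
    print(f"{section}: {rate:.0%} pseudoscientific")
```

Running the same computation for user profiles with different watch histories and comparing the resulting per-section rates would be one way to see how exposure shifts with history.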

We find that YouTube’s recommendation algorithm is more aggressive in suggesting pseudoscientific content when users are searching for specific topics, while these recommendations are less common on a user’s homepage or when actively watching pseudoscientific videos. Finally, we shed light on how a user’s watch history substantially affects the type of recommended videos.

Funding from:

CONCORDIA project (Grant Agreement No. 830927)

CYberSafety II project (Grant Agreement No. 1614254)